Research Interests

My Path

I got into research through math and physics competitions in high school, which led me to study electrical engineering at Sharif University. I've always been drawn to problems where theory meets practice, where the goal is to understand not just how things work, but why.

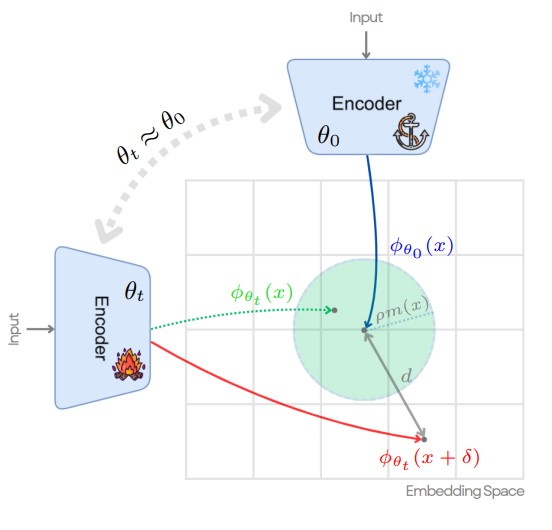

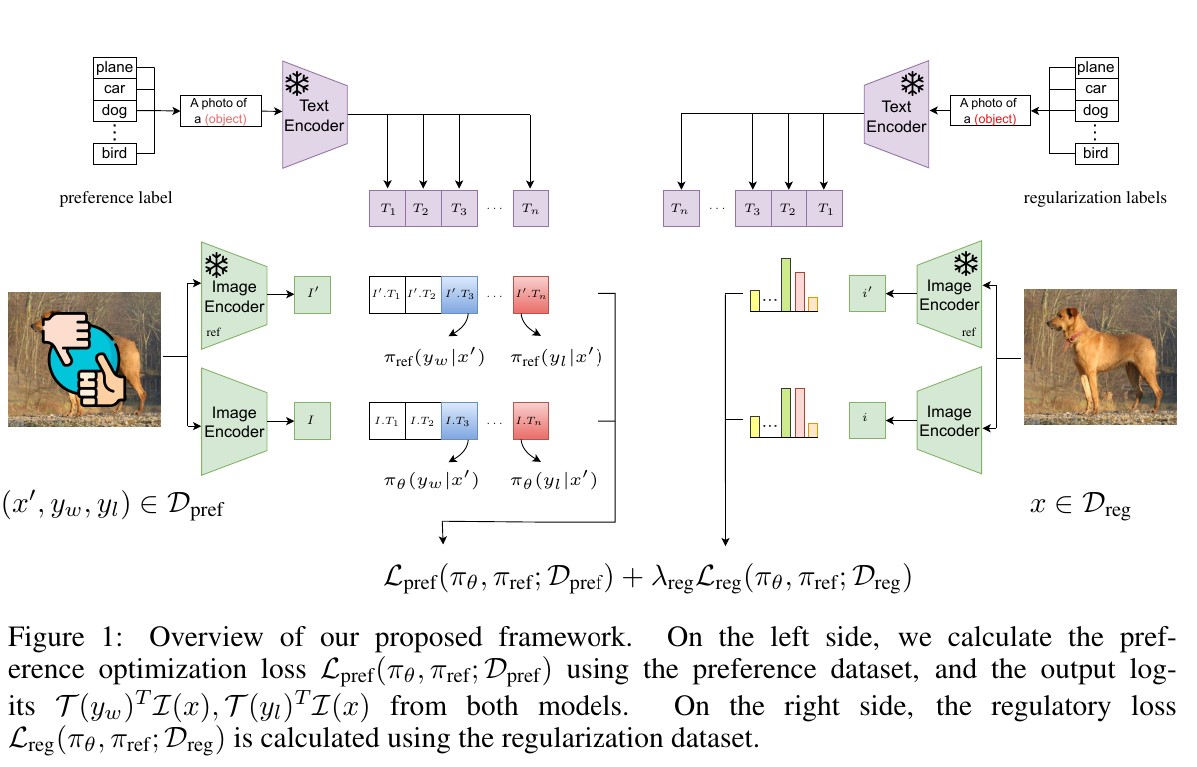

During undergrad, I worked with Professors Sajjad Amini and Moosavi-Dezfooli on the adversarial robustness of embedding models. At EPFL, I worked with Professor Michael Unser on designing robust, efficient, and theoretically 1-Lipschitz models for inverse problems. I also collaborated with Professor Arash Amini on various projects. These experiences shaped how I approach research.

I took many graduate-level courses during undergrad, which built my theoretical foundation in optimization, information theory, and probabilistic methods.

I've learned the most from working with others and teaching. Good ideas usually come from asking simple questions and being willing to explore where they lead.

Goals

I deeply believe in human ingenuity and the potential of AI. I see research progress as coming in waves, each built on theoretical ideas that enable the next breakthrough. In the long term, I aspire to contribute impactful research as a professor. I've always loved teaching and exploring new ideas, and I hope to create an environment where new ideas can meaningfully advance the field.

For now, I hope to contribute to open-source tools, code, and writing that make complex ideas more accessible, while exploring new directions that could contribute to the field.

Interests

- Mathematical foundations of machine learning and optimization

- Adversarial robustness and strategic behavior in learning systems

- Information theory, statistical learning, and statistical physics in machine learning

- Reinforcement learning and online decision-making

- Generative modelling, especially vector field generative models (diffusion models, flow models, etc.)

Publications

Teaching

- Engineering Probability and Statistics — Teaching assistant: Designing projects and problem sets

- Engineering Mathematics — Teaching assistant: Holding practice sessions

- Linear Algebra — Teaching assistant: Holding practice sessions

- Signal Processing — Teaching assistant: Holding practice sessions

- Deep Learning — Teaching assistant: Designing the course project, holding practice sessions

- Machine Learning — Head teaching assistant: Designing problem sets and the project, holding practice sessions, managing the teaching assistant group (course homepage: https://ml-sut-amini.github.io/)

Recordings of my teaching sessions and technical discussions are available here.

Coursework

- Graduate: Deep Learning, Graph Signal Processing, Game Theory, Information Theory, Statistics & Learning, High Dimensional Probability, Deep Generative Models, Graphical Models

- Undergraduate: Linear Algebra, Signal Processing, Convex Optimization, Probability and Statistics, Signals and Systems