CV

This section includes my curriculum vitae. For a PDF version, see here.

Curriculum Vitae

Hi! I am Borna Khodabandeh, currently an independent researcher and a prospective graduate student. I received my B.Sc. in Electrical Engineering with a minor in Physics from Sharif University of Technology.

I aim to apply rigorous theoretical and mathematical approaches to areas of machine learning that have practical value. As I am still exploring, I am broadly interested in generative modelling, adversarial robustness and optimization, reinforcement learning, and understanding learning systems through the lens of information theory, statistics, and optimization theory.

Research Interests

- Mathematical foundations of machine learning and optimization

- Adversarial robustness and strategic behavior in learning systems

- Information theory, statistical learning, and statistical physics in machine learning

- Reinforcement learning and online decision-making

- Generative modelling, especially vector field generative models (diffusion models, flow models, etc.)

Education

Stanford University — Ph.D. in Electrical Engineering (Deferred Admission), Stanford, CA, USA (2026–present)

Sharif University of Technology — B.Sc. in Electrical Engineering, Minor in Physics, Tehran, Iran (2021–2025) — Overall GPA: 19.65/20, Major GPA: 19.93/20

Young Scholars Club (IPhO Training) — International Physics Olympiad Training

Research Experience

- Summer Internship, E3 Program (BIG | EPFL), Advised by Prof. Dr. Michael Unser (2024)

  - Designed 1-Lipschitz-constrained (Parseval) convolutional operators and neural networks

  - Applied to inverse problems and denoising with theoretical bounds on stability and robustness

  - Reduced parameters via symmetry

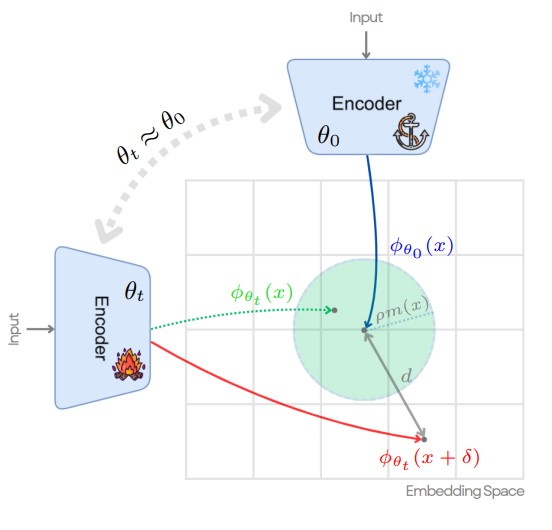

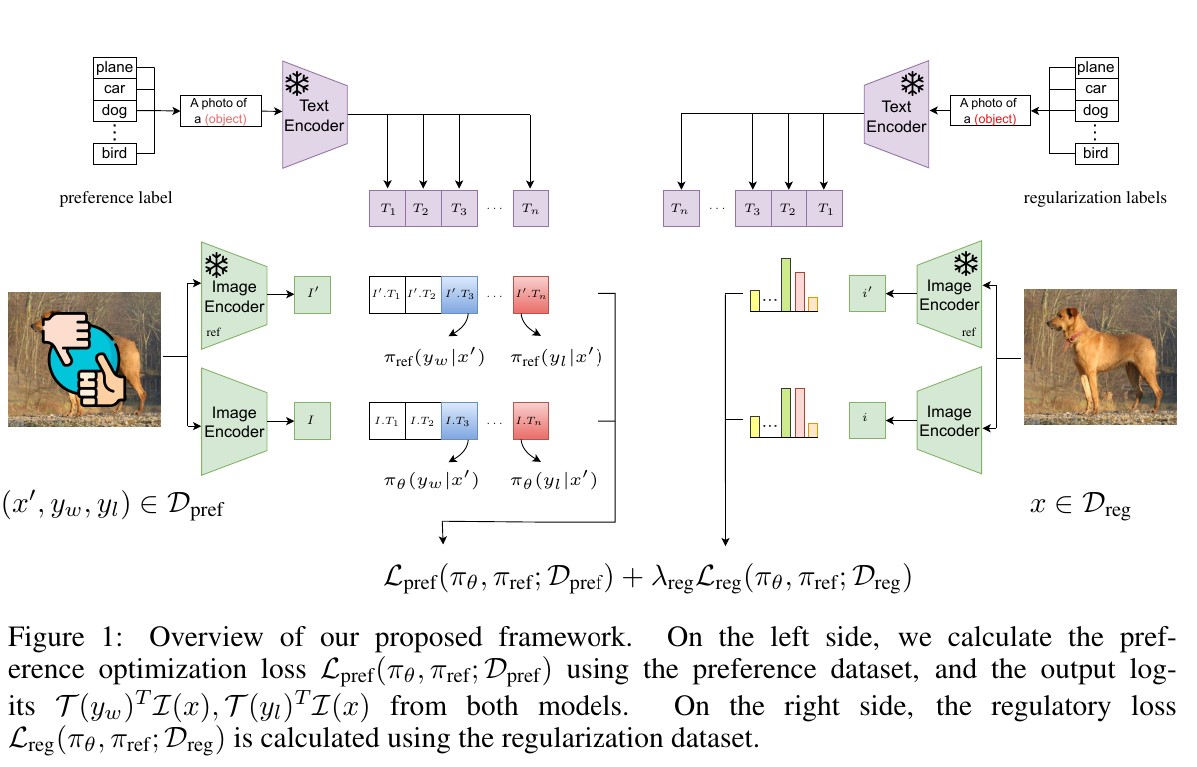

- Bachelor's Project — Adversarially Robust Embeddings in Contrastive Learning, Advised by Prof. Sajjad Amini (in collaboration with Prof. Moosavi-Dezfooli) (2024)

  - For more information, see the Publications section.

- Study Sessions and Reading Groups

  - Causality and bandit algorithms (with BAN at EPFL)

  - Optimization on graphs and learning weights from smooth signals on Erdős–Rényi graphs

  - Designing a chatbot for our university's department with Dr. Arash Amini

  - Exploring Tsallis-entropy–regularized MDPs for preference optimization in language models

  - Exploring the application of preference optimization to consistency models (see the project repository)

  - Coordinated meetings and tracked project progress

Publications

Selected Course Projects

2025 — Phase transitions in LoRA (High Dimensional Probability (SUT)) — GitHub · Report · Slides:

Explained LoRA vs full FT via RMT; linked intruder dimensions to BBP transition.

2024 — Information geometry (Information theory, statistics & learning (SUT)) — GitHub · Report · Slides:

Explored differential geometry in statistical learning; manifolds, divergences, NGD.

2024 — Game theoretic network design (Game theory (SUT)) — GitHub:

Simulated stable matching and optimal selling mechanisms in network scenarios.

2024 — GAN-BERT (Deep learning (SUT)) — GitHub:

Implemented GAN-BERT for detecting LLM-generated text and source model.

Teaching

- Engineering Probability and Statistics — Teaching assistant: Designing projects and problem sets

- Engineering Mathematics — Teaching assistant: Holding practice sessions

- Linear Algebra — Teaching assistant: Holding practice sessions

- Signal Processing — Teaching assistant: Holding practice sessions

- Deep Learning — Teaching assistant: Designing the course project; holding practice sessions

- Machine Learning — Head teaching assistant: Designing problem sets and the project; holding practice sessions; managing the teaching assistant group; course homepage: https://ml-sut-amini.github.io/

Recordings of my teaching sessions and technical discussions are available here.

Coursework

- Graduate: Deep Learning; Graph Signal Processing; Game Theory; Information Theory, Statistics & Learning; High Dimensional Probability; Deep Generative Models; Graphical Models

- Undergraduate: Linear Algebra, Signal Processing, Convex Optimization, Probability and Statistics, Signals and Systems

Activities

- Participant in the 24th ADFOCS (Max Planck), Algorithmic Game Theory

- Competitor in international math contests (WMTC 2016, IMC 2018, WMC 2018)

- Physics Olympiad tutor at top high schools

- Part of a research group; organized meetings and tracked project progress

Skills

- Technical: Advanced mathematics (high-dimensional probability and statistics, stochastic calculus, differential equations); signal processing, information theory, machine learning, and deep learning

- Tools: PyTorch, TensorFlow, OpenCV, scikit-learn, NumPy, pandas, matplotlib, DSPy, einops

- Programming languages: Python, Java, C/C++, Go, MATLAB

- Languages: Persian (native); English (advanced; TOEFL iBT 113/120 (Reading 30, Listening 29, Speaking 25, Writing 29)); French (basic)

- Misc: Problem-solving, collaboration, communication, teaching